
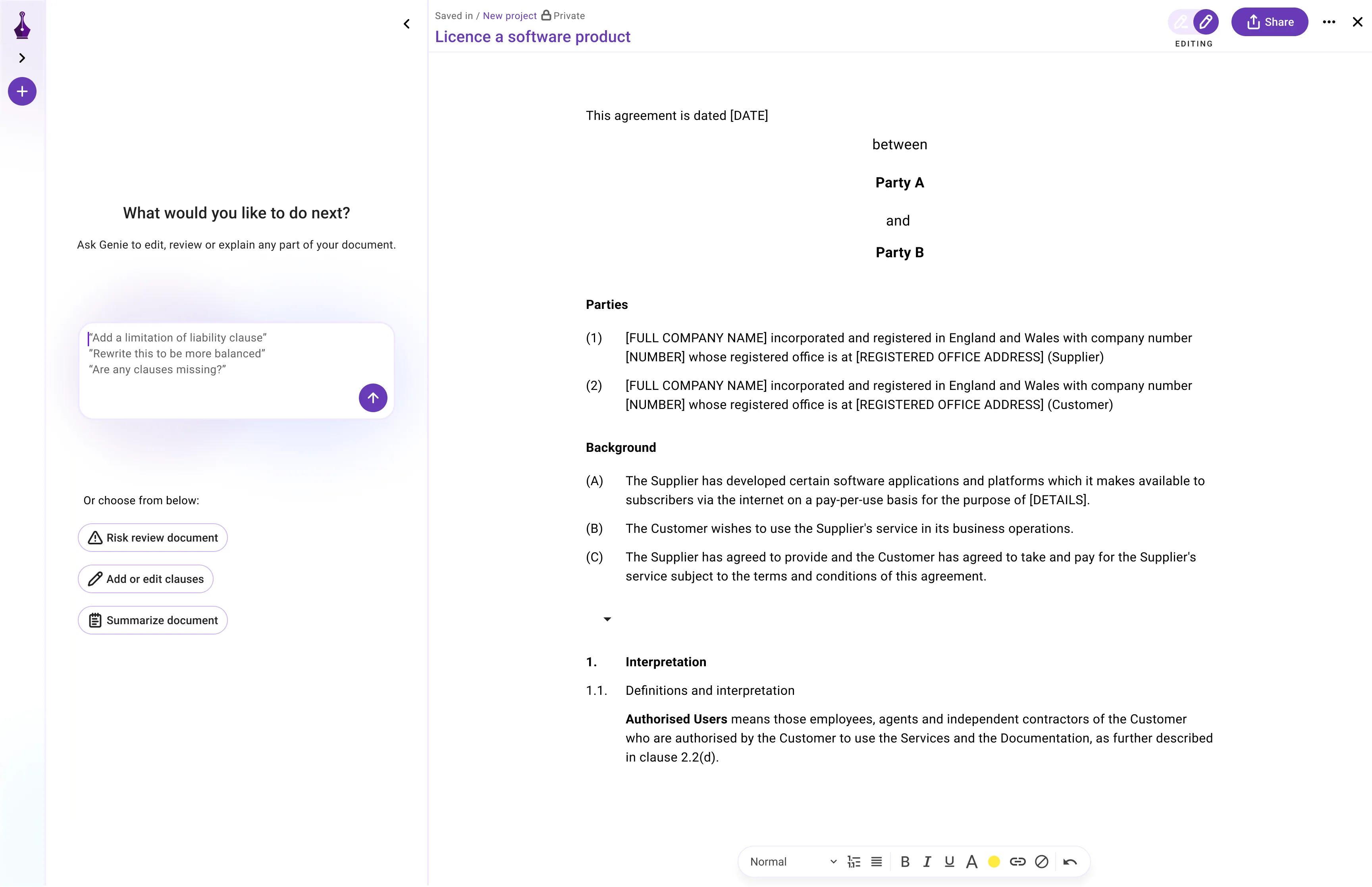

Note: This article is just one of 60+ sections from our full report titled: The 2024 Legal AI Retrospective - Key Lessons from the Past Year. Please download the full report to check any citations.

Challenge: AI Hallucinations and False Information

AI models can sometimes generate false or nonsensical information, known as "hallucinations."

In 2024, the National Center for State Courts recommended that judicial officers and lawyers be trained to critically evaluate AI-generated content rather than blindly trust its outputs.[129]

In a 2024 LexisNexis survey, concerns over AI hallucinations were the most frequently cited hurdle to adopting generative AI in law firms, named by 55% of respondents.[130]
